Marketing BS: AI Language Models, Part 2: The Essay

Good morning everyone,

Last week, I published a briefing on the latest news in AI language processing.

Today’s essay explains how advanced language models might impact companies and the day-to-day lives of marketers.

In a future essay, I will share some perspectives about cost analyses of AI marketing tools, judgement, and future use cases.

—Edward

The implications of recent AI developments

Let’s start with the big question…

Is artificial intelligence the beginning of the end of the world?

This (anonymous) writer at LessWrong seems to think so. They argue that AI is more advanced than generally believed, so we should “pull the fire alarm” now, before AI becomes super-intelligent and (potentially) destroys human civilization. (They’ve since walked back the rasher parts of their thesis, but not their general concern about the dangers of AI.)

For a good primer about the AI Alignment question — “how do you design a super-intelligent machine without accidentally unleashing a catastrophe?” — I recommend this Matthew Yglesias newsletter (with analogies to The Terminator movies).

For more background, check out these posts from Astral Codex Ten:

Shiny new thing or game changer?

I have built my personal brand by calling BS on many (many!) marketing and technology trends. Ten years ago, I wrote an essay titled “Why your company should NOT use Big Data.” I outlined my belief that most companies should focus on marketing fundamentals, rather than getting distracted by the latest “hot” trends.

Isn’t AI just the latest “hot trend” that marketers would do best to ignore?

I’ve tested many tools that help marketers use AI to create blog content at scale, and I’ve been generally disappointed. Yes, you can generate a 1000-word blog post in just a minute or two, BUT you need to factor in additional time for quality control.

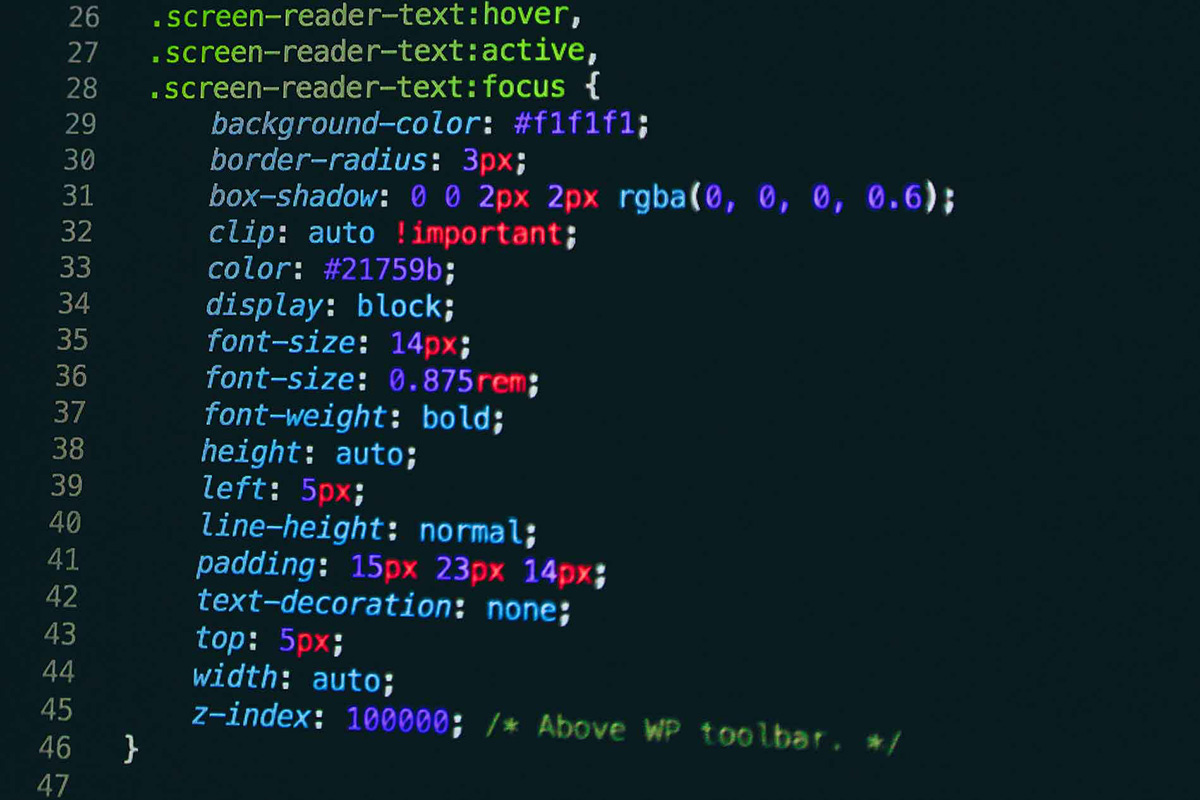

Multiple developer friends of mine now treat Copilot (GitHub’s code-completion tool, built on OpenAI’s Codex model) as an essential part of their workflow. When I asked one of them how often Copilot gets it “right” on the first try, he replied that I was asking the wrong question: the auto-generated output was NEVER perfect. The more important measure, he said, was the percentage of code in each task that met his standard for quality, and right now that’s sitting at about 80%. In other words, after prompting Copilot, he still needs to review every line of auto-generated code and then fix about 20% of it. The role of the intelligent developer doesn’t go away; AI just makes the process more efficient.

Using GPT-3 to write text is like having Copilot produce code: the AI will create content, but you still need a domain expert to review and edit 20% or more of the output. I’ve experimented with GPT-3 and found that the process isn’t necessarily faster, but it can serve as a “muse” to push things forward.

Here’s a practical example, from a novel that I’m working on: there’s a scene where the protagonist is depressed and looking up at the skyline. I felt stuck about describing the view, so I uploaded the chapter into an AI language tool. The process required a few attempts and edits, but it eventually produced a passage that I liked:

I stop and look at the Bellevue skyline and the Cascade mountains beyond. It’s pretty. The perfect image of the new American Dream. The gleaming glass and steel towers reach to the sky, as if begging for acceptance into heaven itself.

Those sentences not only matched the style of the chapter, but they were also more descriptive than anything I would have come up with on my own.

But the process wasn’t faster. The cycle of prompting, auto-generating, editing and repeating took longer than my usual pace for writing a few sentences. The QUALITY of the paragraph, though, was improved by the process.

In this next example, you can see how much of an AI-generated passage is edited by the human writer. I used the AI tool to create a description of a Vice Principal’s office. The bold parts were direct changes I made to the AI’s output:

The VP is sitting behind a lopsided desk which looks like someone hit it with a hammer. Some papers are stacked at the bottom left-hand corner of the desk. Atop the desk is a laptop computer open with some sort of filter that prevents my seeing what is on the screen. To the left is a sitting area with a couch, chair and coffee table. On top of the bookcase above the right-hand wall are three framed posters. Each poster is highlighting one of the school virtues: Courage, Wisdom and Change. The other walls are covered with pictures of famous people. I recognize Abraham Lincoln and George Washington. Then my eyes finally meet those of the Vice Principal…

The AI provided the structure for the paragraph and some interesting details. Roughly half of the auto-generated details did not make sense for my story, so I changed them manually — sometimes a little and sometimes a lot.

If you want to learn more about using AI to create website content, you could try something like GraphiAI — a platform that was trained on specialized B2B verticals. In theory, anyone could use Graphi to create blog posts about any category. In practice, I expect you would need an industry expert to double check the content.

Will the increase in your team’s content output be enough to justify the cost of AI services? I don’t know, but it might be worth a try.

I appreciate that I seem to be endorsing a still-unproven technology — an ironic position given that I opened this section talking about my track record of calling BS on the “shiny new thing” syndrome of the marketing industry.

Why do I think that GPT-3 could be more of the “real deal” than previous buzzworthy trends like Big Data, viral content and influencer marketing?

Two main reasons:

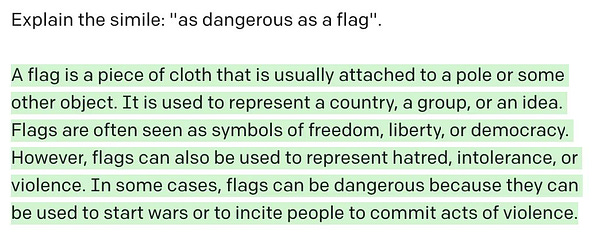

Even though AI is still in the early stages, there have been some amazing demonstrations of its capabilities.

The pace of innovation in the AI world is astonishing. Progress is happening so quickly that ideas that once seemed far-fetched might be realized within years, rather than decades.

In my next AI-focused essay, I will describe some use cases that we might see in the near future.

Is Google okay with this?

When I tell people that I’ve used AI to help me write a novel, they often raise the same issue: plagiarism. I understand that concern; after all, AI systems have read much of the internet and many books. How do we know they’re not just spitting out words that they collected from some other source? In my experience, plagiarism is very rare. When I encounter AI-generated text that sounds really good, I copy the text into Google and seldom find any matches.

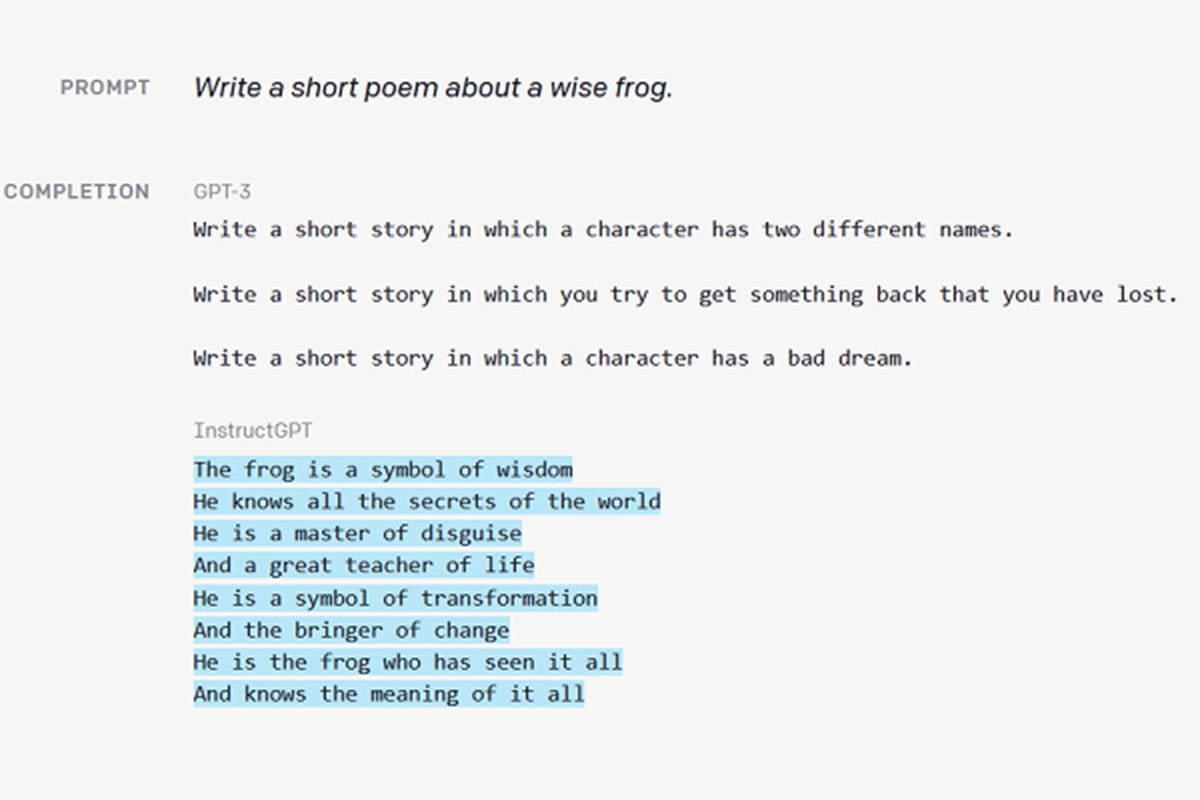

In this Twitter thread, OpenAI researcher Richard Ngo tries to show that GPT-3 can create original ideas:

Ngo continues with even weirder similes — many of which the AI describes better than I could explain — such as “that’s like thinking boats could be sunk by lettuce” and “the type of man who could lose the lottery while trapped in groundhog day.”

In addition to concerns about plagiarism, I hear a lot of questions about what Google might say about AI.

Let's suppose you can create thousands of pages of content in a fast and efficient way.

Will Google penalize you for generating this content with AI systems instead of the “old-fashioned” way (i.e., a team of human writers)?

Google SAYS you will be penalized. The company has implied that AI-generated content will be dealt with like spam. Kevin Indig, the Director of SEO at Shopify, suggests that “Google might treat AI content similar to backlinks.” (If Google identifies a pattern of blatant paid links to your company’s website, you might receive a warning notice; plus, you might experience a significant drop in traffic and keyword ranking.) So, if you create BAD content with AIs — like the auto-generated text that is not reviewed and edited by an experienced human — then expect Google to penalize you.

But if, on the other hand, your company implements an effective process for quality control, you could probably develop AI-created content that is indistinguishable from your human-produced content. In that case, Google would treat both types of content the same — with no penalties for using AI.

Even if Google REALLY WANTED to punish AI users, would they even be able to identify the auto-generated content?

Maybe. There is some evidence that AI-generated text uses a less-varied vocabulary than human-written text. This tendency is a by-product of AI design. Language models like GPT-3 work token by token (a token is a word or a piece of a word); they evaluate the previous content and then predict the most likely option for the next token (and then repeat the process again and again). This token-by-token design helps string together coherent, well-written documents. But because AIs favor the tokens that are “most likely” to appear, they are less likely to include unconventional (though appropriately used) words or sentence structures.
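To see the "predict the most likely next piece, then repeat" loop in miniature, here is a toy Python sketch. It is not how GPT-3 works internally: the "model" is just a table counting which word follows which in a tiny made-up corpus, whole words stand in for the pieces of text a real model predicts, and the names `build_bigram_counts` and `generate_greedy` are mine, chosen for illustration.

```python
from collections import Counter, defaultdict


def build_bigram_counts(tokens):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def generate_greedy(counts, start, length):
    """Build a sequence by always appending the most frequent follower."""
    output = [start]
    for _ in range(length - 1):
        followers = counts.get(output[-1])
        if not followers:
            break  # the last word never had a follower in the corpus
        # most_common(1) returns the single highest-count follower
        output.append(followers.most_common(1)[0][0])
    return output


# Assumption: a tiny invented corpus, with whole words as the "pieces".
corpus = "the cat sat on the mat and the cat ate the fish".split()
counts = build_bigram_counts(corpus)
sample = generate_greedy(counts, "the", 8)
print(" ".join(sample))
```

In this corpus "the" is followed by "cat" twice but by "mat" and "fish" only once each, so the always-pick-the-favorite rule never produces the rarer words. Real systems usually add some randomness when choosing, which softens the effect without removing the pull toward common options.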

The average human probably couldn’t spot this difference, but a Google robot that scans vocabulary distributions might be able to distinguish AI-generated content from human-written content. (Or at least from human content that features a wide range of words and structures. I assume that some less inventive human writers will get flagged as “writing like an AI.”)
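I have no knowledge of what Google actually measures, but one simple stand-in for "vocabulary variety" is the share of words in a passage that are distinct (often called the type-token ratio). The sketch below is only an illustration of that idea; the function name and both sample sentences are invented, and the measure is sensitive to passage length, so it would be a weak detector on its own.

```python
import re


def type_token_ratio(text):
    """Return distinct words divided by total words (0.0 for empty text)."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0
    return len(set(words)) / len(words)


# Assumption: two made-up sentences, one repetitive and one varied.
repetitive = "the store is good and the store is big and the store is good"
varied = "gleaming towers reach skyward while distant mountains fade into haze"

print(round(type_token_ratio(repetitive), 2))
print(round(type_token_ratio(varied), 2))
```

A lower ratio means more repetition. Comparing scores only makes sense for passages of similar length, since longer texts naturally reuse more words.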

Ultimately, though, if your company pushes forward with AI content, you are far more likely to face problems with “offensive” content than with Google search penalties.

More use cases TODAY

Apart from content writing, most of the use cases for AI language tools seem “cool,” but not very practical for a business. To go beyond content generation right now takes some creativity, but it’s not impossible. A friend of mine is using GPT-3 to read all of his company’s customer feedback. Most of the comments involve complaints or requests, but a small percentage — maybe just 1% — give unsolicited praise. The service reps who deal with the feedback are focused on the 99% of messages that require a prompt response to prevent customer dissatisfaction. Even when directed to collect praise, the service reps generally ignore positive comments (those employees are incentivized to hit metrics for dealing with customer calls, not gathering testimonials).

As an experimental solution, my friend turned to GPT-3. Refining the prompts took a lot of trial and error, but now he has a tool that reads through every piece of customer feedback and categorizes the comments as “negative,” “neutral,” or “positive.” When the program identifies a positive comment, it pulls out passages that the company can use in its marketing materials.
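I haven't seen my friend's code, so the following is only a rough Python sketch of what a classify-then-collect loop like his might look like. Everything in it is an assumption: the prompt wording, the function names, and especially `fake_complete`, a keyword-based stand-in that I use in place of a real language-model call so the example runs on its own.

```python
LABELS = ("negative", "neutral", "positive")


def build_prompt(comment):
    """Wrap one customer comment in a classification instruction."""
    return (
        "Classify the customer comment as negative, neutral, or positive.\n"
        f"Comment: {comment}\n"
        "Label:"
    )


def parse_label(reply):
    """Map a free-text reply onto a known label, defaulting to neutral."""
    parts = reply.strip().lower().split()
    first_word = parts[0].strip(".,!\"'") if parts else ""
    return first_word if first_word in LABELS else "neutral"


def collect_testimonials(comments, complete):
    """Keep only the comments that the model labels as positive."""
    return [
        c for c in comments
        if parse_label(complete(build_prompt(c))) == "positive"
    ]


def fake_complete(prompt):
    """Hypothetical stand-in for a model call; uses keyword rules only."""
    comment = prompt.split("Comment: ", 1)[1].rsplit("\nLabel:", 1)[0].lower()
    if "love" in comment or "thank" in comment:
        return " Positive"
    if "refund" in comment or "broken" in comment:
        return " Negative"
    return " Neutral"


# Assumption: three invented feedback messages.
feedback = [
    "My order arrived broken and I want a refund.",
    "What are your opening hours?",
    "I love how quickly your team fixed my problem. Thank you!",
]
testimonials = collect_testimonials(feedback, fake_complete)
print(testimonials)
```

Passing the model in as the `complete` argument keeps the prompt and parsing logic separate from whichever AI service sits behind it, so you can test the plumbing before paying for a single API call.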

The cost to run the tool is small (hundreds of dollars per year), but the effort to create it was significant. Was it worth a CEO’s time to build the solution just to get the testimonials? Probably not. But along the way, my friend learned more about how GPT-3 works and how it could be applied. He expects that knowledge to deliver a higher ROI the next time he conceives an idea for using the AI.

GPT-3 to the rescue

Last Friday, I wanted to buy a turkey for Easter dinner. I phoned seven grocery stores to check if they had any available. No luck. So I went to Google and searched for “largest grocery stores in Seattle” (and variations of those terms), assuming that bigger stores would be more likely to have turkeys in stock. But Google kept showing me lists of the “best” grocery stores in Seattle.

So I tried GPT-3. Here’s a transcript of our “conversation” (with GPT-3’s responses in bold):

Q: Which grocery stores in Seattle have the largest number of square feet?

A: Safeway, QFC, and Grocery Outlet are the three stores with the largest square footage in Seattle.

Q: Which chain has larger stores, QFC or Safeway?

A: Safeway stores are larger than QFC stores

Q: Which is the largest Safeway store in Seattle?

A: The Safeway store at 3820 Rainier Avenue is the largest Safeway store in Seattle

I have no idea if the information GPT-3 provided was correct. Are Safeway stores really bigger than QFC stores? And I’m pretty sure the Grocery Outlet reference is wrong. But I called the 3820 Rainier store. They had a ton of fresh turkeys. GPT-3 saved Easter.

Keep it simple,

Edward

PS — In addition to my experiments using GPT-3 for writing a novel, I’ve played around with using AI for stand-up comedy jokes. (I was inspired by this GPT-3 routine where Jerry Seinfeld and Eddie Murphy talk about San Francisco, as well as this sketch about Larry Page and Peter Thiel discussing how hard it is to run a company.)

Here’s an example of a joke created by GPT-3:

"The hardest job in the world is being a mum. It's like being in the army but you're not allowed to shoot anyone."

And if you’re interested in trying AI tools for other applications, the place to start is OpenAI itself. The site Beta.openai.com provides basic tutorials and gives you immediate access to GPT-3 itself. For the earlier examples I shared about writing fiction, I used NovelAI, a “monthly subscription service for AI-assisted authorship, storytelling, virtual companionship, or simply a GPT powered sandbox for your imagination.”

Edward Nevraumont is a Senior Advisor with Warburg Pincus. The former CMO of General Assembly and A Place for Mom, Edward previously worked at Expedia and McKinsey & Company. For more information, including details about his latest book, check out Marketing BS.