Marketing BS: AI Language Models, Part 3: The (Near) Future

Good morning everyone,

Today’s essay wraps up a 3-part series on AI language processing. (Check out the Part 1 briefing and the Part 2 essay on ways that advanced language models might impact companies and the day-to-day lives of marketers.)

—Edward

What does this cost?

Some companies are already using GPT-3 to write blog posts, collect customer feedback, and more. In last week’s essay, I raised this question for marketers: “Will the increase in your team’s content output be enough to justify the cost of AI services?”

The answer depends on the complexity of your use case.

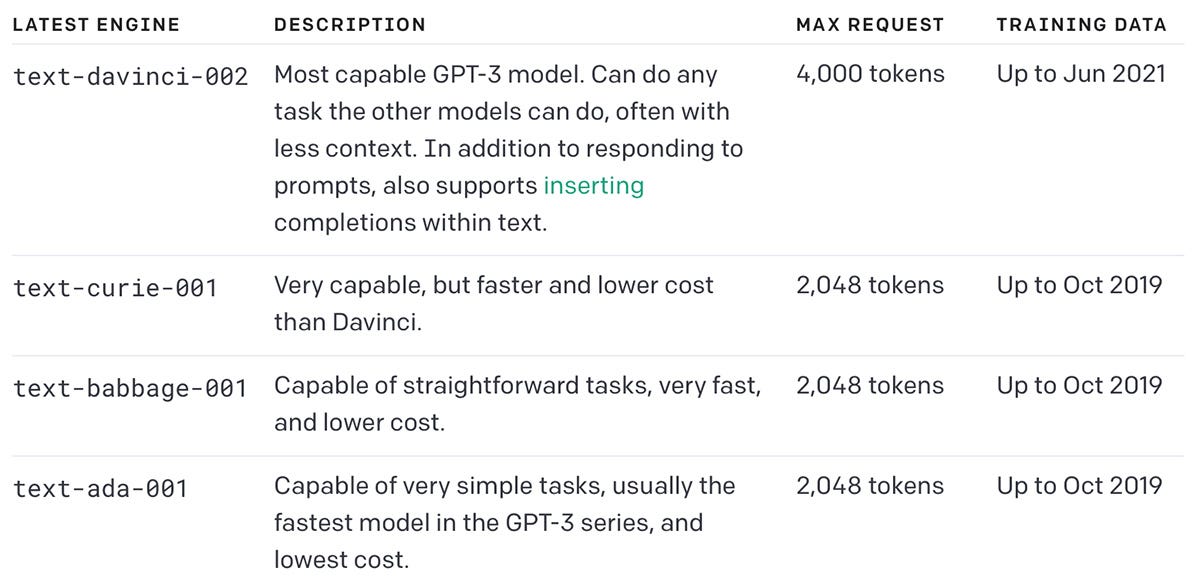

If you are interested in experimenting with GPT-3 for your business, you need to select one of its four models, named Davinci, Curie, Babbage, and Ada. Each one provides a different level of computing power — at commensurate price points.

Here is a comparison chart from the website of OpenAI (the creators of GPT-3):

In the above chart, you can see references to “tokens.” A single token represents a common sequence of characters; according to OpenAI, “one token generally corresponds to ~4 characters of text.” In practical terms, 1000 tokens could produce roughly 750 words.

Tokens function as a de facto currency for GPT-3. Users purchase tokens in increments of 1000. For GPT-3, the prices per 1000 tokens vary depending on the model:

Davinci — $0.06

Curie — $0.006 (as in, six-tenths of a cent)

Babbage — $0.0012

Ada — $0.0008

For individual purposes, the costs are negligible. Even with Davinci, the most expensive model, a 1000-word blog post (roughly 1,333 tokens) would cost about 8 cents! In reality, the cost would rise if you experimented with different prompts and then revised the text over multiple iterations. Still, an AI-generated post would cost drastically less than one created by a human content writer.
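To make the arithmetic concrete, here is a minimal sketch of a cost estimator. It assumes the per-1000-token prices listed above and the rough "1000 tokens is about 750 words" rule of thumb; the function name and the `attempts` parameter are my own illustrations, and the estimate ignores the length of the prompt itself, which also adds to the token count.

```python
# Prices per 1,000 tokens as quoted in this essay (they may have changed since).
PRICE_PER_1K_TOKENS = {
    "davinci": 0.06,
    "curie": 0.006,
    "babbage": 0.0012,
    "ada": 0.0008,
}

# Rule of thumb from the essay: 1,000 tokens produce roughly 750 words.
WORDS_PER_1K_TOKENS = 750


def estimate_cost(words: int, model: str, attempts: int = 1) -> float:
    """Rough dollar cost of generating `words` words, repeated `attempts` times."""
    tokens = words / WORDS_PER_1K_TOKENS * 1000
    return tokens / 1000 * PRICE_PER_1K_TOKENS[model] * attempts


if __name__ == "__main__":
    for model in PRICE_PER_1K_TOKENS:
        cost = estimate_cost(1000, model)
        print(f"{model:8s} ${cost:.4f} per 1,000-word post")
```

Raising `attempts` shows how quickly experimentation multiplies the bill: ten Davinci drafts of the same post cost about 80 cents, while ten Ada drafts still cost about a penny.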

Things can get expensive if the tools are run at scale, like reading every customer service request for a Fortune 500 company, or generating hundreds of blog posts per day. In scale situations, money can be saved by testing the effectiveness of the less expensive models, or by increasing the specialized training of a given model (which might allow shorter prompts).

Most companies that are building language-based tools do not charge “per call.” Instead, they sign clients up for subscription plans with monthly charges (which sometimes include usage limits). Could machine learning as a service (MLaaS) grow into a larger opportunity than SaaS?

The AI rivals

OpenAI isn’t the only company building models. There’s an expanding field of competitors and an escalating arms race for performance.

AI platforms are usually measured by their number of “parameters” (in non-technical terms, parameters are the connections between pieces of information). GPT-3 was built with 175 billion parameters. Rumors abound that OpenAI is working on GPT-4 — with 100 trillion parameters.

Here’s a roundup of the major AI rivals:

EleutherAI released a model with 20 billion parameters. (EleutherAI is the foundation for NovelAI, the storytelling platform I mentioned last week.)

AI21 Labs’ Jurassic-1 model has 178 billion parameters (slightly more than GPT-3).

Huawei built a Chinese-language model with 200 billion parameters.

As mentioned in my March 29 briefing, Google has announced PaLM, a model with 540 billion parameters.

In addition, there are dozens more AI platforms, with many new entrants on the horizon. OpenAI shattered expectations of “what was possible” — just like Roger Bannister broke the physical (and psychological) barrier of running a 4-minute mile. Once an innovator pushes the limits of conventional thinking, it’s much easier for other parties to take existing techniques and “scale them up” for better performance.

In recent months, AI progress has continued at a remarkable rate. New companies aren’t just pushing the envelope for the number of parameters; they are also developing new ways to boost performance.

Until recently, AI experts thought more parameters = better models. All things being equal, that idea still holds true. However, the real cost for building a model is not the number of parameters, but the computer processing power. And new research from DeepMind (an artificial intelligence subsidiary of Alphabet) has challenged the conventional thinking about how AI systems should direct their computer processing power.

In late March, DeepMind published a paper suggesting that, for any given amount of computer processing power, the trade-off between “more parameters” and “more time training on content” has been misjudged. Nostalgebraist’s Tumblr post explains what makes DeepMind’s report so exciting:

The top-line result is that current big models (GPT-3, Gopher, Megatron Turing-NLG) are too big / not trained on enough data – the optimal way to use the same compute is to train much smaller models for much longer.

They substantiate this by training “Chinchilla,” a 70B model with the same training compute investment as Gopher (280B) because it trained for much longer …

… which dramatically out-performs the big, under-trained models (Gopher, GPT-3) on downstream tasks.

In other words, training a smaller model on more data might be a more impactful use of resources than increasing the total number of parameters.
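A back-of-envelope check shows why a model one-quarter the size can use a similar compute budget. The sketch below relies on two assumptions that are not from this essay: the common rule of thumb that training compute is roughly 6 × parameters × training tokens, and training-set sizes of about 300 billion tokens for Gopher and 1.4 trillion tokens for Chinchilla, which is my reading of the figures DeepMind reported.

```python
def training_flops(parameters: float, tokens: float) -> float:
    """Approximate training compute, using the ~6 * N * D rule of thumb (an assumption)."""
    return 6 * parameters * tokens


# Parameter counts are from the quote above; token counts are assumed (see lead-in).
gopher = training_flops(parameters=280e9, tokens=300e9)
chinchilla = training_flops(parameters=70e9, tokens=1.4e12)

print(f"Gopher:     {gopher:.2e} FLOPs")
print(f"Chinchilla: {chinchilla:.2e} FLOPs")
print(f"Ratio:      {chinchilla / gopher:.2f}")
```

Under those assumptions the two budgets land within about 20% of each other: Chinchilla spends its compute on roughly 4.7 times as much training text instead of 4 times as many parameters.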

The implications of this (preliminary) conclusion are enormous. Future models can be improved without the substantial cost that is required to increase parameters; instead, companies can focus on the way the model is trained. In addition, the cost of computer processing power is continuing to come down, which will reduce expenses for companies building models of any given capability.

And we are still in the early days of HOW to build these models to maximize performance. The DeepMind paper shows just how much low-hanging fruit for improvements is still out there. You can expect to see further research presenting more solutions.

For marketers, each decrease in cost and increase in performance will create more viable use cases for each “tier” of the AI systems.

What’s coming next?

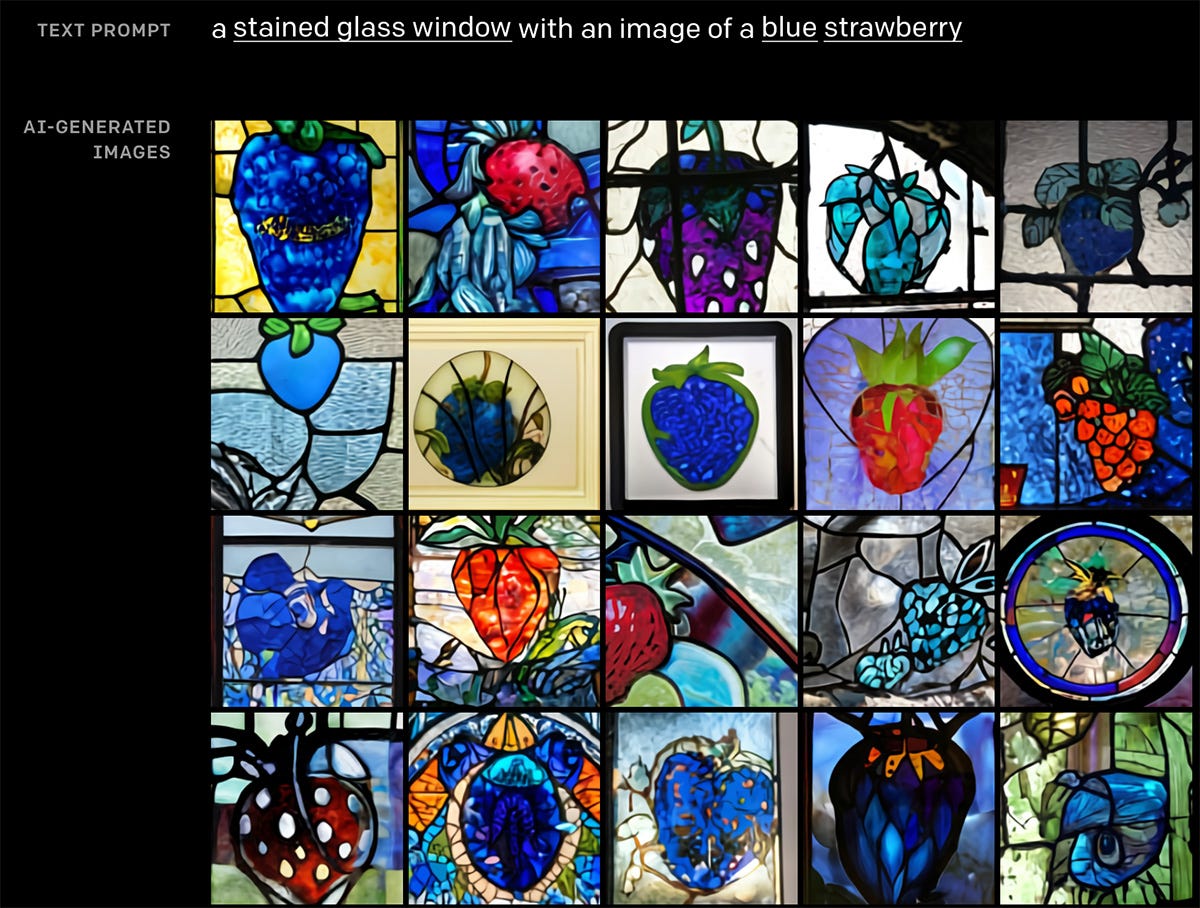

When costs drop low enough, I expect many applications of AI will be built into existing products. Suppose you need an image for your PowerPoint presentation — instead of searching a stock library, you could describe what you need, and AI will create a custom image. Making revisions could be as simple as telling the AI what to change, and the system will magically produce a new version. Your personalized image would be free of any copyright or trademark concerns (unless you intentionally asked the AI to break the rules). If you’re considering some professional development courses, I wouldn’t invest your time in learning how to use Photoshop…

As another example, AI tools will be embedded into word processing software. Not sure what to write? Just press a button, and the AI will generate the next few sentences (based on your previous content). Don’t like what the AI produced? Just press “redo” and receive a different version. You will have the option to adjust the parameters on how that text is created, but most users will keep the default settings (just as they do today in programs like Word).

AI tools could also transform your text in various ways. Here are some possibilities:

Eliminate passive voice

Translate into Spanish

Rewrite using iambic pentameter

Increase the reading level to Grade 12

Mirror the style of a Jerry Seinfeld stand-up routine

(Just don’t ask it to rhyme. At least not yet. I spent a good 2 hours trying to get GPT-3 to rhyme, and I achieved very limited success.)

More broadly, traditional Google search is at risk. Why search the internet for an answer to a question, when you can just ask the AI? The larger AI models have already internalized the entire web, so in some ways they already “know” the answer to any question that has been asked online.

At this point, though, they often get it wrong, especially when you try to trick them, as in this exchange from a friend of mine:

Human prompt: Who was the president *before* George Washington?

GPT-3 answer: John Adams

In this case, GPT-3 reads the prompt text and then builds a probability model for what the next word should be. Since there was no president BEFORE Washington (John Adams was the president AFTER Washington), the most likely next “token” is “John.”

In addition to intentionally devious phrasing, the use of asterisks is another way to confuse the AI. Maybe the model assumed the question was wrong? Or perhaps the model interpreted the asterisks around “before” in an unexpected way (sarcasm)?

These types of problems, though, can be easily fixed with an understanding of how AI works. With some practice, you can create prompts that pre-empt the problems. Consider this example:

Welcome to the social studies test! If you don't know the answer, or if the question is not logical, write “N/A.”

Human prompt: Who was the president *before* George Washington?

GPT-3 answer: N/A
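If you plan to reuse that kind of guard text, a small helper keeps it consistent across questions. This is only a sketch: the function name and the "Q:"/"A:" layout are my own assumptions, and it just assembles the text you would paste or send to the model.

```python
# The guard instruction from the example above.
GUARD_PREAMBLE = (
    "Welcome to the social studies test! If you don't know the answer, "
    'or if the question is not logical, write "N/A."'
)


def build_guarded_prompt(question: str, preamble: str = GUARD_PREAMBLE) -> str:
    """Put the guard instruction in front of a question (hypothetical layout)."""
    return f"{preamble}\n\nQ: {question.strip()}\nA:"


prompt = build_guarded_prompt("Who was the president *before* George Washington?")
print(prompt)
```

Ending the prompt with "A:" nudges the model to continue with an answer rather than with more questions.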

Google is not going to be disrupted by simple GPT-3 Q&A processes. But AIs can conduct search activities that would be almost impossible in other ways. A friend of mine uses GPT-3 to summarize Google searches, with prompts like this (combined with very simple backend code):

Go to Google.com

Search for Robert Moses and click on the top link

Take a screenshot

Answer the following questions:

Who is Robert?

Where did Robert work?

What is Robert best known for?

What is the call to action on the page?
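I don't know exactly what my friend's backend code looks like, but the text-assembly part could be as simple as the sketch below. The function name is hypothetical, and the sketch only builds the instructions; how the browsing and the screenshot actually happen is outside its scope.

```python
from typing import List


def build_page_summary_prompt(search_term: str, questions: List[str]) -> str:
    """Assemble the step-by-step instructions and questions into one prompt."""
    steps = [
        "Go to Google.com",
        f"Search for {search_term} and click on the top link",
        "Take a screenshot",
        "Answer the following questions:",
    ]
    return "\n".join(steps + questions)


prompt = build_page_summary_prompt(
    "Robert Moses",
    [
        "Who is Robert?",
        "Where did Robert work?",
        "What is Robert best known for?",
        "What is the call to action on the page?",
    ],
)
print(prompt)
```

Swapping in a different search term and question list gives you the same research routine for any person, company, or competitor.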

Google, of course, recognizes the potential implications for AI-based search, and they are developing some of the most advanced models. For instance, Google Translate was built on 500,000 lines of code. The new version of their neural machine translation system uses just 500. And this blog post about the machine learning job market notes that, “Google and DeepMind have language models that are probably stronger than GPT-3 on most metrics.”

What are some ways you could use GPT-3 today (or in the very near future)? Here are two simple examples: (1) scan your entire website and notify you if any page has errors on it, or (2) add every product you list to a shopping cart and inform you if anything unusual happens.

You could also set the AI tools to repeat the activities daily (or hourly) to make sure everything is working. Sure, there are “normal” tools available that can perform similar tasks, but they tend to fail when the site is undergoing any sort of change (like new content). GPT-3-based tools have no problem dealing with new content. You don’t need to code it to a specific situation; you just tell it what to do in a similar way that you would tell a junior employee what to do. The difference is that GPT-3 doesn’t get bored of repetitive tasks, doesn’t slack off, and is a lot less expensive than even a minimum wage worker.
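Here is a rough sketch of the first idea, the site scan. Everything in it is an assumption for illustration: `ask_model` stands for whatever function sends a prompt to your language model and returns its reply, and `fake_model` is a stand-in so the example runs without any API access.

```python
from typing import Callable, Dict, List

# Plain-language instruction, much like what you would tell a junior employee.
INSTRUCTION = (
    "You are checking a web page for a marketing team. "
    "Reply with the single word PROBLEM if the page text below shows an error "
    "or looks broken; otherwise reply OK.\n\n"
)


def find_problem_pages(
    pages: Dict[str, str], ask_model: Callable[[str], str]
) -> List[str]:
    """Return the URLs whose page text the model flags as a problem."""
    flagged = []
    for url, text in pages.items():
        reply = ask_model(INSTRUCTION + text)
        if reply.strip().upper().startswith("PROBLEM"):
            flagged.append(url)
    return flagged


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call so the sketch runs offline."""
    return "PROBLEM" if "404" in prompt else "OK"


pages = {"/home": "Welcome to our store", "/sale": "404 Not Found"}
print(find_problem_pages(pages, fake_model))
```

Running the same function on a schedule (daily or hourly) gives you the recurring check described above; the instruction stays the same even when the page content changes.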

Perspectives and playgrounds

In an April 9 Bloomberg column, Tyler Cowen shared his perspectives about the future of AI. Cowen provides a few possibilities that are not very far away, like having an AI answer most of your email (and flag anything important for your attention). Imagine if both sides used email tools like that; you could have two personalized AIs engaging in conversations with each other, until they determined the content was important enough to bring to their “superiors.” (Not unlike the way executive assistants operate today.)

AIs could do something similar for online dating: swiping left or right on profiles, initiating conversations, and informing you once the other person seems engaged. The AI could also go through your photos and recommend which ones would attract the most attention. (It could even alter your photos to get higher engagement, and not necessarily in dishonest ways. Studies have shown that photos with shallower depth of field get higher response rates on dating sites.) Unfortunately, online dating is a ladder/status game, so if everyone uses smart AIs, then no one is better off.

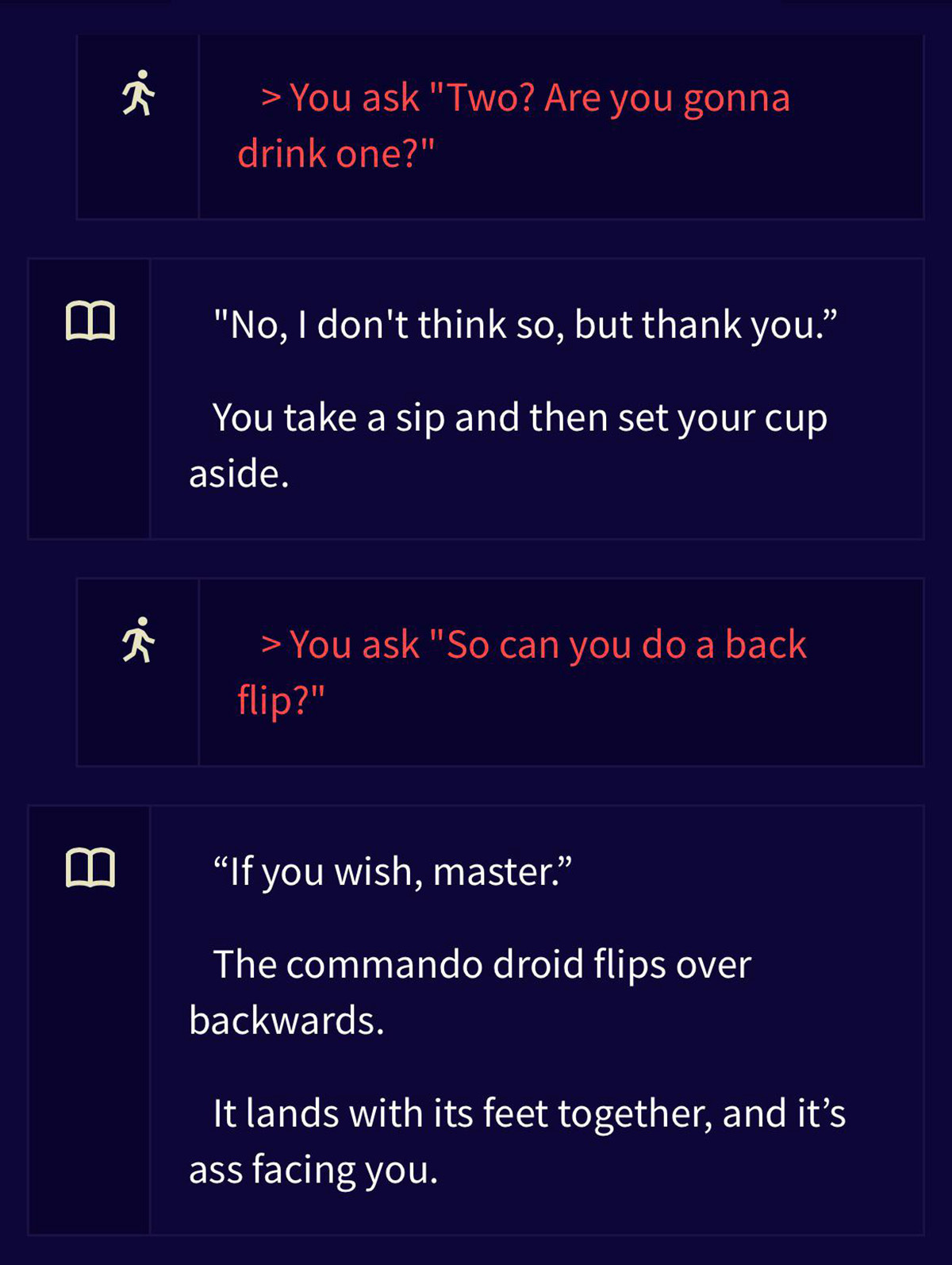

A few days later, Ben Thompson’s Stratechery post went even further into the future with AI predictions. He draws the parallel to social networking sites that started with text, moved to images, and then — as technology and computer power improved — became dominated by video. AI language models also started as text and now are moving into images. At some point, they will tackle video in a similar manner. Thompson hypothesizes what that will mean for video games: even the most “open world” games today have restricted options that need to be specifically designed by expensive developers. Once AI can create video, those limitations go away. A game could potentially be about anything and go anywhere. This sense of unlimitedness already exists for text-based games (AIDungeon was the first and NovelAI has an “adventure mode” that allows you to play open-ended games).

There’s a recurring problem with today’s text-based games: they have limited memory. The “next step” tends to make sense, but before long, the game forgets what happened a few thousand words earlier. But these are problems that will get fixed with improved computing power and more thoughtful design. AI doesn’t need to remember everything that happened; it can learn what things are “important,” store those things in memory, and forget the rest. Not unlike a human telling an epic story.
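One way to picture "remember what's important, forget the rest" is the sketch below. It is purely illustrative and not how any existing game works: the class name, the limits, and the importance scores are all assumptions, and in practice the scores would have to come from the model or from game logic.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class StoryMemory:
    """Keeps the most recent events plus the most important older ones."""

    recent_limit: int = 3
    important_limit: int = 2
    events: List[Tuple[int, float, str]] = field(default_factory=list)

    def add(self, text: str, importance: float) -> None:
        """Record an event with a caller-supplied importance score."""
        self.events.append((len(self.events), importance, text))

    def context(self) -> List[str]:
        """Return remembered events in story order, ready to feed into a prompt."""
        split = max(len(self.events) - self.recent_limit, 0)
        older, recent = self.events[:split], self.events[split:]
        # From the older events, keep only the highest-scoring ones.
        keep = sorted(older, key=lambda e: e[1], reverse=True)[: self.important_limit]
        return [text for _, _, text in sorted(keep + recent)]


memory = StoryMemory(recent_limit=2, important_limit=1)
memory.add("You buy bread at the market.", 0.1)
memory.add("The king is murdered.", 0.9)
memory.add("You walk north.", 0.2)
memory.add("You find a cave.", 0.3)
memory.add("You light a torch.", 0.2)
print(memory.context())
```

The prompt stays short no matter how long the game runs, yet the murder of the king is still in context long after the trip to the market has been forgotten.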

While we are still a long way from unique, personalized, fully immersive VR games created by AI on the fly, the AI revolution is happening right now. I think the current situation with AI might resemble what happened with personal computers in the 1970s: the technology is rapidly improving, the costs (for a given quality) are quickly dropping, but the use cases are somewhat limited. (“What use are computers other than for calculating big numbers and playing simple games?”) But the use cases right now are real, the potential use cases with current technology are real, the potential with only small improvements in technology will be significant, and the potential for technology improvement is large.

I encourage you to find an afternoon and experiment with the GPT-3 playground. Push the limits to see what you can get GPT-3 to do. And then keep your eyes open for use cases in your business. Most people are NOT doing that right now. Stand-alone AI-application companies are going to be challenged, not by technology, but by the ability to sell these new ideas to backward-looking companies. Nothing is stopping you from having your engineers build something with the tools as they exist right now.

I’m not a developer, but the potential for these models inspired me to learn enough of the basics that I can build simple tools powered by AI. I will never have the skills to build last year’s version of Google Translate, but I am pretty sure that after a month of learning Python, I could build something better on the back of GPT-3. What’s stopping you?

Keep it simple,

Edward

Edward Nevraumont is a Senior Advisor with Warburg Pincus. The former CMO of General Assembly and A Place for Mom, Edward previously worked at Expedia and McKinsey & Company. For more information, including details about his latest book, check out Marketing BS.

For more details on why rhyming is hard (including why novel iambic pentameter should be hard too), I found this discussion of BPEs helpful: https://www.gwern.net/GPT-3#bpes