Models versus Experiments

Good morning everyone,

At the bottom of last week’s post, I included some insights about the current state of executive recruitment. Following today’s newsletter, you can read the perspective from another executive recruiter.

—Edward

Models go mainstream

Two weeks ago, researchers from Harvard University published a report about COVID-19 transmission. One of their suggestions is startling: “prolonged or intermittent social distancing may be necessary into 2022.”

Two thousand twenty-two.

But how did Harvard’s scientists determine that date? And in the bigger picture, how can researchers ever forecast the future with a reasonable degree of certainty? (Assuming, of course, that science labs aren’t hiding crystal balls.)

To track the spread of COVID-19, researchers are developing sophisticated models. Using a complex program that studies past events and analyzes current trends, scientists can run their models to simulate future possibilities.

Models are commonly used by researchers from a wide range of academic disciplines (economics, climate change, demographics, etc.). But in the fight against a contagious pandemic, models have appeared front and centre in our lives:

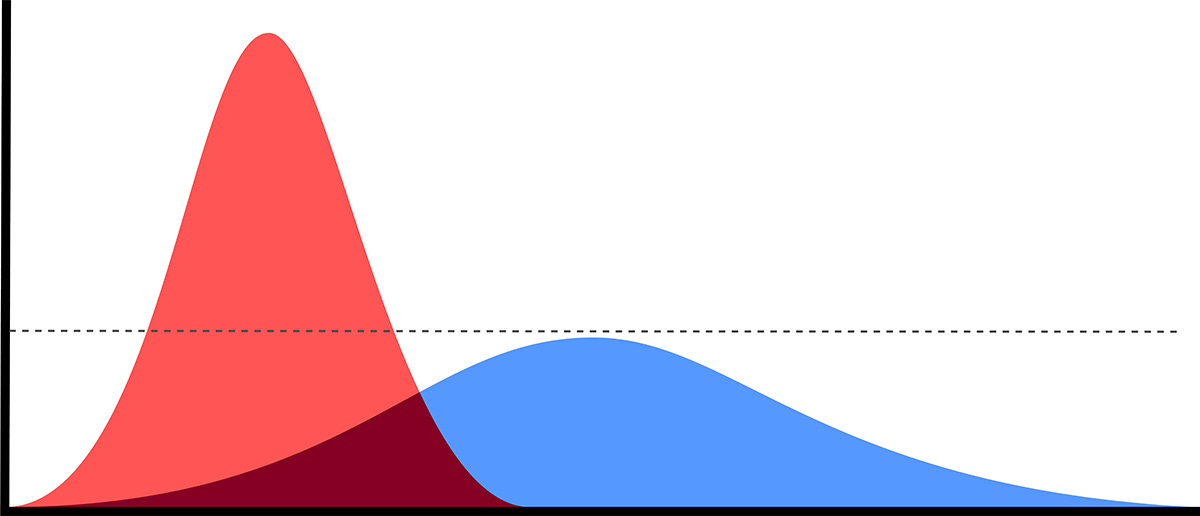

For many people, “flattening the curve” is the first mathematical model they have examined since their high school days.

Back in March, the models developed by Neil Ferguson and his team at Imperial College were instrumental in convincing world leaders to take drastic actions.

In blog posts and newspaper articles, you can find models that predict the impact of closing restaurants, opening schools, or cancelling sports leagues.

Despite the valuable insights provided by many models, we need to remember that they are just that — models. They cannot predict the future with complete accuracy, especially because of the challenges involved in designing a mathematical model:

The world is complicated: You’ve probably heard of “the butterfly effect,” where “small variances in the initial conditions could have profound and widely divergent effects on the system’s outcomes.” In Washington state, a choir practice — which could have been easily cancelled — spread the virus to an astonishing number of people. One small event, one massive result. You cannot build a model to predict all of the micro-decisions in daily life.

We don’t know the parameters: Researchers design models using known facts, which change over time. At first, analysts believed that (1) the virus was not spread as an airborne aerosol, (2) asymptomatic carriers were not contagious, and (3) masks were ineffective. All of those theories have been upended, rendering models’ early parameters incorrect.

They are reliant on math: In business school, we learned about the Black-Scholes equation of financial modeling for derivatives. The equation relies on key assumptions: (1) all prices move continuously, (2) prices have a “lognormal distribution,” and (3) volatility is constant over the length of the contract. We KNOW those assumptions are false, and yet Black and Scholes won the Nobel prize for their work. Why? Because their assumptions allowed for the very possibility of creating a mathematical model to solve a problem. Most COVID-related models follow symmetrical mathematical curves — they show an increase at some rate, followed by a leveling off, and then a decrease at the equivalent rate. Will this happen? Maybe. Or maybe not. The important thing to understand: these models use symmetrical mathematical curves not because of some magical power of prognostication, but because researchers used familiar (but potentially inaccurate) math concepts to design the models.

We don’t know how people will react: “The Lucas critique” suggests that people who learn about projections (like a model) will adjust their behavior. In the stock market, people discuss the “January Effect,” the perceived seasonal price increase at the start of each year. In response to the popularization of this theory, many financiers have attempted to take advantage of the situation (if everyone knows that stock prices will go up on January 1, they start making purchases on December 31). Once people saw models communicating the idea that going outside increases the risk of COVID-related deaths, what happened? People stopped going outside. Models cannot anticipate abrupt changes in personal behavior.
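To make the point about symmetrical curves concrete, here is a minimal sketch. It uses a Gaussian-shaped curve purely as one example of a symmetric function; I am not claiming any specific COVID model uses this exact formula, and the peak day, peak value, and width are hypothetical numbers chosen for illustration.

```python
import math

def bell_curve_value(day, peak_day, peak_value, width):
    """Gaussian-shaped curve: symmetric around peak_day by construction."""
    return peak_value * math.exp(-((day - peak_day) ** 2) / (2 * width ** 2))

# Hypothetical parameters, chosen only for illustration (not real data).
PEAK_DAY, PEAK_VALUE, WIDTH = 30, 2000.0, 10.0

def mirrored_pair(offset):
    """Return the curve's value `offset` days before and after the peak."""
    before = bell_curve_value(PEAK_DAY - offset, PEAK_DAY, PEAK_VALUE, WIDTH)
    after = bell_curve_value(PEAK_DAY + offset, PEAK_DAY, PEAK_VALUE, WIDTH)
    return before, after

for offset in (5, 10, 20):
    before, after = mirrored_pair(offset)
    print(f"{offset} days before peak: {before:.1f} | {offset} days after peak: {after:.1f}")
```

Each pair of values matches, but only because the formula forces the decline to mirror the rise. The symmetry comes from the choice of math, not from anything observed about the virus.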

Models and mitigating risk

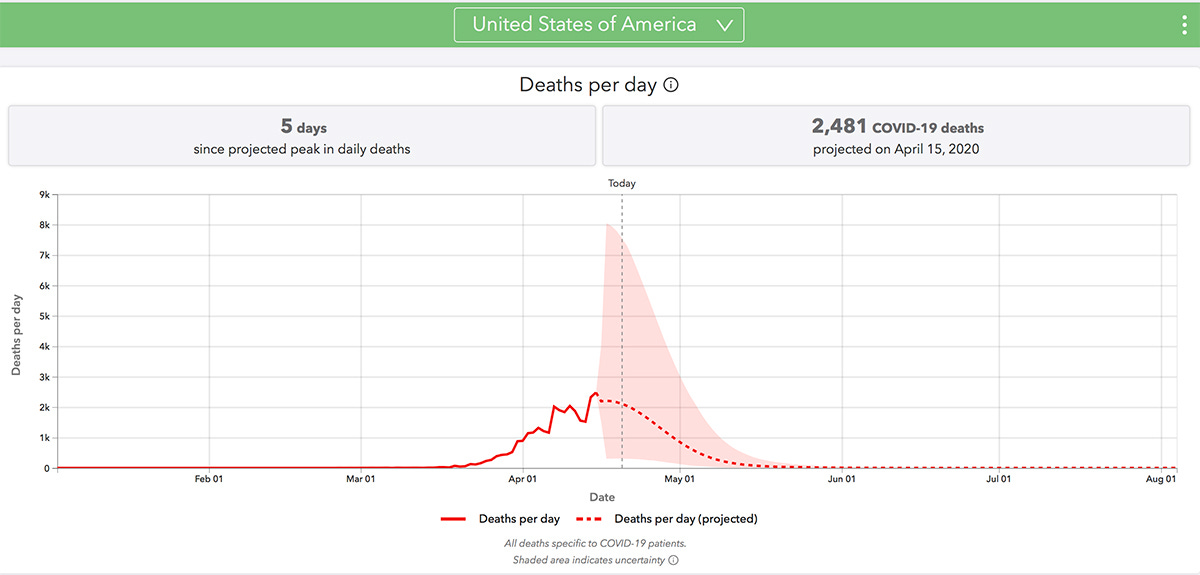

The Institute for Health Metrics and Evaluation published a report that projected the number of COVID-related deaths in America. The report has influenced the White House’s perspective on several important decisions — including when to “re-open America.”

The problem? Many sources identified flaws in the IHME’s research.

In an April 17 article for STAT News, Sharon Begley delivered scathing criticism:

IHME uses neither [of the two common modeling methods]. It doesn’t even try to model the transmission of disease, or the incubation period, or other features of Covid-19, as SEIR and agent-based models at Imperial College London and others do. It doesn’t try to account for how many infected people interact with how many others, how many additional cases each earlier case causes, or other facts of disease transmission that have been the foundation of epidemiology models for decades.

Instead, IHME starts with data from cities where Covid-19 struck before it hit the U.S., first Wuhan and now 19 cities in Italy and Spain. It then produces a graph showing the number of deaths rising and falling as the epidemic exploded and then dissipated in those cities, resulting in a bell curve. Then (to oversimplify somewhat) it finds where U.S. data fits on that curve. The death curves in cities outside the U.S. are assumed to describe the U.S., too, with no attempt to judge whether countermeasures — lockdowns and other social-distancing strategies — in the U.S. are and will be as effective as elsewhere, especially Wuhan.

To be clear, I am NOT arguing that the economic shutdown was an overreaction (or an underreaction). When you are facing (1) a wide range of possible (and catastrophic) outcomes and (2) complete uncertainty about your current situation, then caution is always the prudent course of action. If nothing else, an abundance of caution provides additional time to secure more data, resulting in better, more informed decisions. (And in this case, extra time to produce more masks and other supplies).

If we continue to treat models like “the truth,” then we will not push ourselves to gain a deeper understanding of how the virus really works — or, more importantly, what steps we should take to mitigate risk. In many cases, determining an effective action plan is easier to accomplish than unravelling complex virological mysteries.

Garbage in, garbage out

In the business world, people use the term “black box” to describe computer programs that transform various pieces of data into specific recommendations. The “black”-ness refers to the often-opaque design of these programs: how they analyze information and synthesize results.

In many cases, financial models are just overcomplicated multilinear regressions with limited ability to predict the future. When working with companies, I regularly encounter “Marketing Mix Models” (commonly referred to as MMMs). These programs attempt to estimate the relative impact of different marketing activities or “channels.”

For instance, Hallmark Cards might design an MMM to figure out the relative impact of their different types of marketing spend. Simple versions of these models will often overweight whatever marketing activities are executed immediately before big events (e.g., an MMM for Hallmark would probably show that television spend directly before Mother’s Day was very effective).

More sophisticated versions consider additional variables like promotions and competitor activity. The ability to include myriad factors actually creates a problem: with so many variables, the models cannot adequately identify which specific factors were responsible for which specific impacts.

Returning to the Hallmark example, was it really a television campaign that boosted sales? Maybe people would have purchased cards anyway, with or without exposure to TV commercials. The black box spits out the answer, without giving companies the clear information to understand HOW and WHY the answer was provided.

So what’s the alternative to MMMs?

Instead of using an MMM to determine the impact of television spend, a company could run an experiment in a half-dozen cities:

Keep your marketing activity flat across most of the country, but dramatically increase your television spend in select “test” cities.

Measure your sales city-by-city to see if (and by how much) the test cities saw a bump in sales.

You can “attribute” any sales bumps to your TV ads.
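The three steps above can be sketched in a few lines. This is a rough illustration, not a full analysis: the city names and sales figures are hypothetical, and comparing test cities against the flat "control" cities (rather than looking at test cities alone) is my assumption about how you would separate the TV effect from whatever was happening everywhere.

```python
from statistics import mean

# Hypothetical weekly sales per city: (before the test, during the test).
test_cities = {
    "City A": (1000, 1180),
    "City B": (800, 930),
    "City C": (1200, 1390),
}
control_cities = {
    "City D": (900, 945),
    "City E": (1100, 1150),
    "City F": (1000, 1055),
}

def average_growth(cities):
    """Mean fractional change in sales from the 'before' to the 'during' period."""
    return mean((during - before) / before for before, during in cities.values())

def estimated_lift(test, control):
    """Growth in test cities minus growth in control cities."""
    return average_growth(test) - average_growth(control)

lift = estimated_lift(test_cities, control_cities)
print(f"Test city growth:    {average_growth(test_cities):.1%}")
print(f"Control city growth: {average_growth(control_cities):.1%}")
print(f"Lift attributed to TV: {lift:.1%}")
```

Subtracting the control growth matters: if sales rose everywhere for unrelated reasons, you do not want to credit that rise to the TV ads.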

There’s an obvious obstacle for this experiment: cost. Regional TV ads are more expensive per impression than national ones. As such, a constant series of experiments would be very expensive. But you could test your strategy ONCE, and then use the results to guide future practices.

The key is to collect as much data as you can when you run that single experiment. For example, your TV spots could include an offer code. By comparing how many customers used the code with the total lift you saw in those test cities, you might find that total impact was 10x the measured impact from the codes. Now when you run national TV campaigns, you can ASSUME that the total impact will be 10x whatever you measure with the code. You have a parameter to include in future models (i.e., measure code redemptions and multiply by 10 to get total impact from any television spend).
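Here is that offer-code logic as a small sketch. The function names and all of the numbers are hypothetical; they are picked so the test produces the 10x multiplier from the example above.

```python
def code_to_total_multiplier(total_incremental_sales, code_redemptions):
    """Ratio of total measured lift to code redemptions in the test cities."""
    if code_redemptions <= 0:
        raise ValueError("need at least one code redemption to compute a multiplier")
    return total_incremental_sales / code_redemptions

def estimate_total_impact(code_redemptions, multiplier):
    """Scale observed redemptions by the multiplier learned from the experiment."""
    return code_redemptions * multiplier

# Hypothetical results from the one-time test in the test cities.
test_total_lift = 5000        # incremental sales measured city-by-city
test_code_redemptions = 500   # sales that actually used the offer code
multiplier = code_to_total_multiplier(test_total_lift, test_code_redemptions)

# Later, on a national campaign, only redemptions are directly measurable.
national_code_redemptions = 12000
national_estimate = estimate_total_impact(national_code_redemptions, multiplier)

print(f"Multiplier from the test: {multiplier:.0f}x")
print(f"Estimated national impact: {national_estimate:,.0f} sales")
```

The multiplier is an assumption carried forward from a single test, so it is worth re-running the experiment occasionally to check whether it still holds.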

Companies can implement this tactic for every marketing channel. And then you can run some of those experiments again in a year or two and see if anything has changed. Note that experimentation is not perfect: What about interaction effects? What about diminishing returns? What about changing consumer tastes?

Despite the limitations with experiments, companies can still learn more by running tests than by trusting black box MMMs.

Black box models and new experiments

Let’s apply the ideas of black boxes and experiments to COVID-related topics. Medical researchers input data points and the model (a black box) creates a visual prediction of future trends. Both individual citizens and government leaders trust the information, even if few people can explain the model’s methodology and design.

What’s missing from the process are clearer parameters for experiments. In the previous discussion of marketing strategies, I used the example of code redemptions to evaluate the total impact of television advertising. To fight the pandemic more effectively, we need more experiments with more “offer code” equivalents.

Researchers ARE conducting experiments right now. An April 17 article in the Wall Street Journal summarized the findings from a preprint (i.e., before peer review) submitted by a team at Stanford University.

On April 3 and 4, the team collected blood samples from 3,300 people in Santa Clara County, California. Researchers then analyzed the samples for the presence of antibodies (which could indicate how many people were previously infected with the coronavirus). The results were shocking: 50–80x more people in the county had been infected with the virus than had been officially diagnosed.

If the conclusions from the Stanford experiment were not only accurate, but also applicable nationally, then the death rate from COVID-19 would be 50–80x lower (about 0.12–0.2%, or roughly 1.2x to 2x the flu’s). A substantially lower-than-believed death rate could justify re-opening our economy in the very near future.
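The arithmetic behind that range is simple division: if the true number of infections is 50–80x the confirmed count, the same number of deaths gets spread over a denominator 50–80x larger. A minimal sketch follows. The death and case counts are hypothetical, chosen only so the output lands near the range quoted above; they are not real data from the study.

```python
def infection_fatality_rate(deaths, confirmed_cases, undercount_factor):
    """Deaths divided by estimated total infections (confirmed x undercount)."""
    estimated_infections = confirmed_cases * undercount_factor
    return deaths / estimated_infections

# Hypothetical counts: 100 deaths among 1,000 confirmed cases (10%).
deaths, confirmed_cases = 100, 1000

for factor in (50, 80):
    rate = infection_fatality_rate(deaths, confirmed_cases, factor)
    print(f"If infections are {factor}x confirmed cases: {rate:.3%} fatality rate")
```

The result is only as good as the undercount factor, which is exactly the number the critics of the study dispute.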

BUT…as anyone with lab experience will tell you, “This is just one experiment! You need to conduct hundreds of experiments, critiquing and iterating the process.”

Biotech expert Balaji Srinivasan (who I have quoted before in these letters) shared his insightful skepticism about the Stanford study. In another piece of criticism, this post assesses the experiment’s (lack of) statistical reliability.

In other experiments, researchers are testing things like how long COVID-19 can survive on different surfaces and how well the virus survives at different temperatures.

We can also observe the results of NATURAL experiments. Countries around the world have handled the pandemic in a variety of ways; some countries have followed (mostly) similar game plans, while other nations pursued very different strategies.

Over time, we can evaluate the effectiveness of each government’s response, and (hopefully) understand which specific actions yield the greatest results. At the moment, unfortunately, we are often left scratching our heads:

Japan has fared surprisingly well given their lack of coordination. Have we undervalued mask use? Or can we attribute their lower transmission rates to the policy of closing schools but keeping restaurants open?

Sweden opted against a shutdown of businesses, but individuals appear to follow protocols with more seriousness than some countries with enforced stay-at-home measures. How do we explain why Sweden’s number of cases is growing at a much slower rate than Italy’s (but still much faster than neighboring Denmark’s)?

We can also look at natural experiments in the past. During the 1918 Spanish Flu outbreak, US cities responded with a wide range of policy responses (National Geographic recently published an article with illustrations that highlighted the striking contrast between cities).

The bold experiment

On March 21, economist Arnold Kling wrote a blog post describing an audacious experiment:

Take 300 volunteers who all test negative for the virus… Put 100 of the volunteers in a room with someone who has the virus but is asymptomatic. Make sure that they all get a chance to get close to the infected person.

Put 100 of them in a room with someone who is symptomatic. Make sure they get a chance to get close to the infected person. Next, have a symptomatic victim cough on his hand and touch a doorknob 100 times, meaning 100 different doorknobs. Then have the last 100 volunteers each touch one of the doorknobs and then touch their faces.

Over the next few days, measure the difference in infection rates.

Kling’s experiment mirrors the television test I explained earlier. By simulating a real environment — with clear control groups — you can gain a deeper understanding of the situation. For the current pandemic, researchers could run dozens of experiments to test the impact of things like re-opening schools or restaurants. In the same way that testing TV campaigns is pricey, these COVID experiments would also be expensive — but instead of money, the cost would be human safety.

At present, experiments like the one described by Kling are unlikely. First of all, there are legal issues with the idea of paying human subjects to expose themselves to a potentially lethal disease. Plus, most people oppose — for good reasons — the use of medical trials outside the strict ethical guidelines of governmental and academic organizations.

But we are currently facing an unprecedented situation. Countries are being forced to choose when and how to re-open their businesses (which could increase the number of deaths), or to continue a shutdown that’s devastating the economy (which will also impact many people’s health in a negative way).

As the pandemic spreads, our society is reconsidering some opinions and taboos. States and counties are allowing doctors to cross borders without local medical licences. Google and Apple are building a tracking tool that would have been considered — just a few short weeks ago — a massive invasion of privacy.

Perhaps the taboo on experimentation with human subjects might be broken soon as well?

And make no mistake, even without formal experiments like Kling’s, we are still knowingly putting some people in dangerous situations. We are asking medical workers to risk their lives. We are testing unproven vaccines on human volunteers. And we are sending the global economy into a coma, causing extreme hardship to individuals around the world.

To me, recruiting paid volunteers to help us learn more about COVID seems like a no-brainer. We can come together to celebrate the volunteers as heroes. How different would the situation be than asking volunteers to join the military and serve overseas?

Just like the TV experiment, an investment in a well-designed experiment can save a lot of money (and lives) in the future. From a cost basis, you can clearly identify COVID’s risk profiles by age. By limiting the pool of volunteers to young people with no co-morbidities, researchers could keep the fatality rate well below 0.1%.

Every day, we see cracks in stay-at-home policies; the world will not accept a social distancing lockdown for the next 18-ish months until a vaccine is widely available. Re-opening businesses seems like a question of “how soon,” not “if.” Wouldn’t it be prudent to know the relative costs of different strategies? Which is “safer” to re-open: restaurants, retail, or schools?

Knowledge is our greatest weapon to fight COVID; we need to leverage all of the tools available.

Keep it simple and stay safe,

Edward

Career Information

Noah Roth is an executive recruiter specializing in positions filled by top-tier consultants (generally Bain, BCG, and McKinsey). He recently filled me in on his impressions of what is happening in executive recruiting right now. The remainder of this note is from Noah:

1) Function: I’m seeing a lot of demand for anything on the cost side while companies weather this storm. I saw a similar pattern in ’08. At least among ex-consultants, there was a lot of demand for lean, supply chain, transformation, operational excellence, and change management. The boom on the revenue side came once companies were convinced that they were as lean as possible and couldn’t cut any further to get to profitability, which was also around the time they saw demand increase. That’s when you’ll see more marketing and BD roles emerge.

2) Industry: Obviously travel, tourism, hospitality, etc. are in trouble, but there are industries poised to grow. Look for hiring from healthcare, ecommerce, and remote service providers of all sorts, like tele-health, e-learning, and remote conferencing platforms.


Edward Nevraumont is a Senior Advisor with Warburg Pincus. The former CMO of General Assembly and A Place for Mom, Edward previously worked at Expedia and McKinsey & Company. For more information, including details about his latest book, check out Marketing BS.