A common thread across my career is coming up with “different” ideas and then trying to make them happen in large organizations that are often resistant to anything happening. Oftentimes the thing I WANTED to do could not be done, so I tried to figure out what I COULD get done within the limits that the organization was creating.

As an example, when I was at Expedia I led loyalty and email marketing. My team had lots of ideas for driving the business, but as you would expect from an online seller of travel, almost all of the ideas involved making changes to the website. At the time, making any change to the website was a huge undertaking. This was back in 2010. We wanted to add a “share my trip on Facebook” button. I was told that would be a four-month project and cost $400,000. We did not add the button. Instead we looked for things we could do that had minimal or zero impact on the website. Stuff we could do that did not take developer time. I think one of the more impressive achievements was creating the VIP Hotel program. We convinced (or “allowed”) ~10% of the hotels in any given market to become VIP hotels. The hotels guaranteed that Expedia Elite customers would get upgraded to the best available room on any stay booked through Expedia. Getting that program off the ground was very, very difficult, but it involved only one small change on the website (and a lot of human labor in the background).

The point is that sometimes you can’t do the best, most obvious thing, but that doesn’t mean you should give up and do nothing. There is always something you can do to make things better in any organization.

I was reminded of this when I saw this Twitter thread last weekend (click through to read it all):

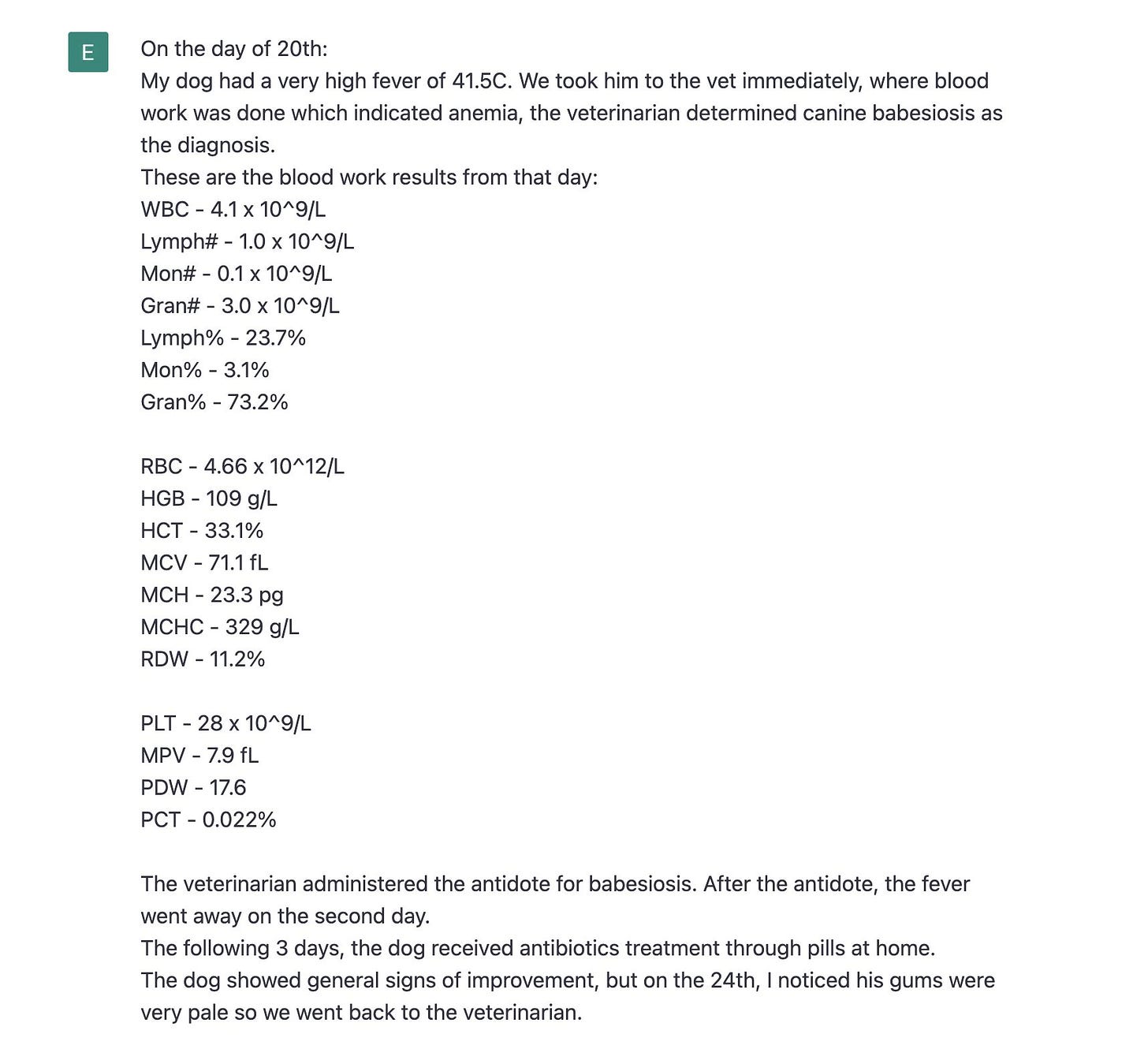

Cooper’s dog got sick, and the treatments proposed by multiple vets did not seem to be working. Finally Cooper plugged the dog’s symptoms and, this is where it gets super interesting, the actual blood test results into GPT-4.

GPT-4 modestly admitted that “it was not a veterinarian”, but then provided an analysis of the test results. It said that the dog likely had anemia, but because the dog did not respond to treatment, there was likely some sort of “underlying condition”. Cooper asked what the underlying issue could be, and GPT-4, after reiterating that it would be better if Cooper talked to an actual veterinarian, listed three possibilities:

Co-infections

Internal bleeding

IMHA (Immune-mediated hemolytic anemia)

The vets had already considered co-infection and internal bleeding and done the tests to rule them out. But neither vet had mentioned IMHA. Cooper took his dog to a third vet and mentioned IMHA. The vet agreed it was plausible. They ran the tests, verified the diagnosis, and saved the dog’s life.

Do what we can

I have a suspicion that self-driving cars are already safer than humans. Tesla has reported that cars on Autopilot have one accident every 4.85 million miles, while drivers not using Autopilot have one accident every 1.4 million miles. This is not perfect proof, as perhaps people use Autopilot only in safer conditions (or only on freeways, where more miles are travelled per minute), but when you hear about Tesla accidents it is always about when Autopilot did something weird rather than when a human did something stupid.
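For anyone who wants to check the arithmetic, here is a quick sketch that turns the two figures quoted above into accident rates and a ratio. The variable and function names are my own, and the calculation takes the reported numbers at face value; it does nothing to correct for the "safer conditions" caveat.

```python
# Miles driven per reported accident, as quoted in the paragraph above.
MILES_PER_ACCIDENT_AUTOPILOT = 4_850_000
MILES_PER_ACCIDENT_MANUAL = 1_400_000


def accidents_per_million_miles(miles_per_accident: float) -> float:
    """Convert 'one accident every N miles' into accidents per million miles."""
    return 1_000_000 / miles_per_accident


autopilot_rate = accidents_per_million_miles(MILES_PER_ACCIDENT_AUTOPILOT)
manual_rate = accidents_per_million_miles(MILES_PER_ACCIDENT_MANUAL)

# How many times more often accidents are reported without Autopilot.
ratio = manual_rate / autopilot_rate

print(f"Autopilot: {autopilot_rate:.3f} accidents per million miles")
print(f"Manual:    {manual_rate:.3f} accidents per million miles")
print(f"Ratio:     {ratio:.2f}x")
```

On these raw numbers the gap is roughly 3.5x, well short of a 10x or 100x margin.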

Regulations will stop self-driving car rollout until self-driving is 10x or 100x safer than human driving (and it may not even be allowed then; we still take off our shoes before we board airplanes).

I expect regulations will also limit or delay the ability to have AI doctors. There are already restrictions on what nurses and nurse practitioners are allowed to do (in many states NPs can’t even prescribe medication without physician oversight). I do not know how long it will be before AI is allowed to do “doctor things”, but I would bet it will be some time after they let nurse practitioners do it…

It is pretty clear that, though we care about our pets, we are far more willing to take risks in their care than we are with ourselves. If I were going to use LLM AI in the medical field, I would start with animal care before human care. While AI has the potential to dramatically improve the quality and reduce the cost of medicine, this may be a case where we need to start with what we CAN do before getting to what we WANT to do.

One more piece of evidence: the first comment on Cooper’s thread was from someone who would not want OpenAI to get access to their medical records, for privacy reasons.

Keep it simple,

Edward